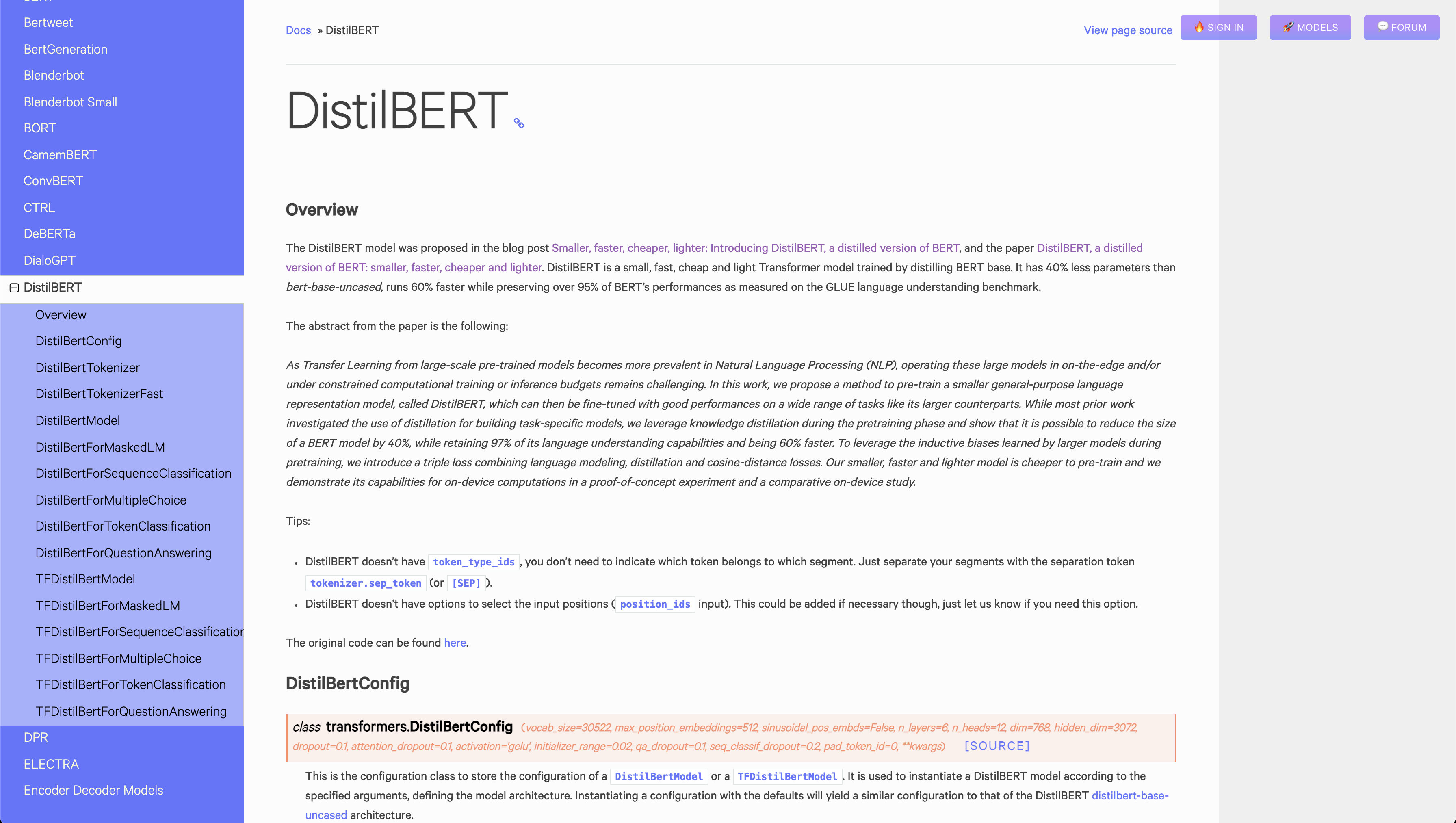

DistilBERT

A distilled version of BERT: smaller, faster, cheaper and lighter

About DistilBERT

DistilBERT is a distilled version of BERT, a Transformer model, designed to be smaller, faster, cheaper and lighter. It retains over 95% of BERT’s performance on the GLUE language understanding benchmark while having 40% fewer parameters and running 60% faster. This was achieved by applying knowledge distillation during the pre-training phase and training with a triple loss that combines language modeling, distillation and cosine-distance losses.
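To make the triple loss more concrete, here is a minimal pure-Python sketch for a single token position. It is an illustration under stated assumptions, not the actual DistilBERT training code: the function names, the equal default weights, the temperature of 2.0, and the temperature-squared scaling of the distillation term are all hypothetical choices made for this example.

```python
import math

def softmax(logits, temperature=1.0):
    # Numerically stable softmax over a list of logits, softened by a temperature.
    scaled = [x / temperature for x in logits]
    m = max(scaled)
    exps = [math.exp(x - m) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def distillation_loss(student_logits, teacher_logits, temperature=2.0):
    # KL divergence from the softened teacher distribution to the student's.
    # The temperature**2 factor is a common convention, assumed here.
    p_teacher = softmax(teacher_logits, temperature)
    p_student = softmax(student_logits, temperature)
    kl = sum(t * (math.log(t) - math.log(s)) for t, s in zip(p_teacher, p_student))
    return kl * temperature ** 2

def mlm_loss(student_logits, target_index):
    # Cross-entropy of the student's prediction against the true masked token.
    return -math.log(softmax(student_logits)[target_index])

def cosine_loss(student_hidden, teacher_hidden):
    # 1 - cosine similarity: zero when the hidden vectors point the same way.
    dot = sum(s * t for s, t in zip(student_hidden, teacher_hidden))
    norm_s = math.sqrt(sum(s * s for s in student_hidden))
    norm_t = math.sqrt(sum(t * t for t in teacher_hidden))
    return 1.0 - dot / (norm_s * norm_t)

def triple_loss(student_logits, teacher_logits, target_index,
                student_hidden, teacher_hidden,
                w_distill=1.0, w_mlm=1.0, w_cos=1.0, temperature=2.0):
    # Weighted sum of the three terms; the weights are illustrative assumptions.
    return (w_distill * distillation_loss(student_logits, teacher_logits, temperature)
            + w_mlm * mlm_loss(student_logits, target_index)
            + w_cos * cosine_loss(student_hidden, teacher_hidden))

# Toy example: a 3-word vocabulary and 2-dimensional hidden states.
loss = triple_loss(
    student_logits=[1.0, 2.0, 0.5],
    teacher_logits=[0.8, 2.5, 0.1],
    target_index=1,
    student_hidden=[0.3, 0.9],
    teacher_hidden=[0.2, 1.0],
)
print(f"triple loss: {loss:.4f}")
```

When the student exactly matches the teacher, the distillation and cosine terms vanish and only the language modeling term remains, which is a quick sanity check on the sketch.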

The potential of DistilBERT for on-device computations has been demonstrated in a proof-of-concept experiment and a comparative on-device study.

Created by https://huggingface.co/

