
Category: GPT-3 Alternative Large Language Models (LLMs)

Chinchilla by DeepMind

A GPT-3 rival by DeepMind

About Chinchilla by DeepMind

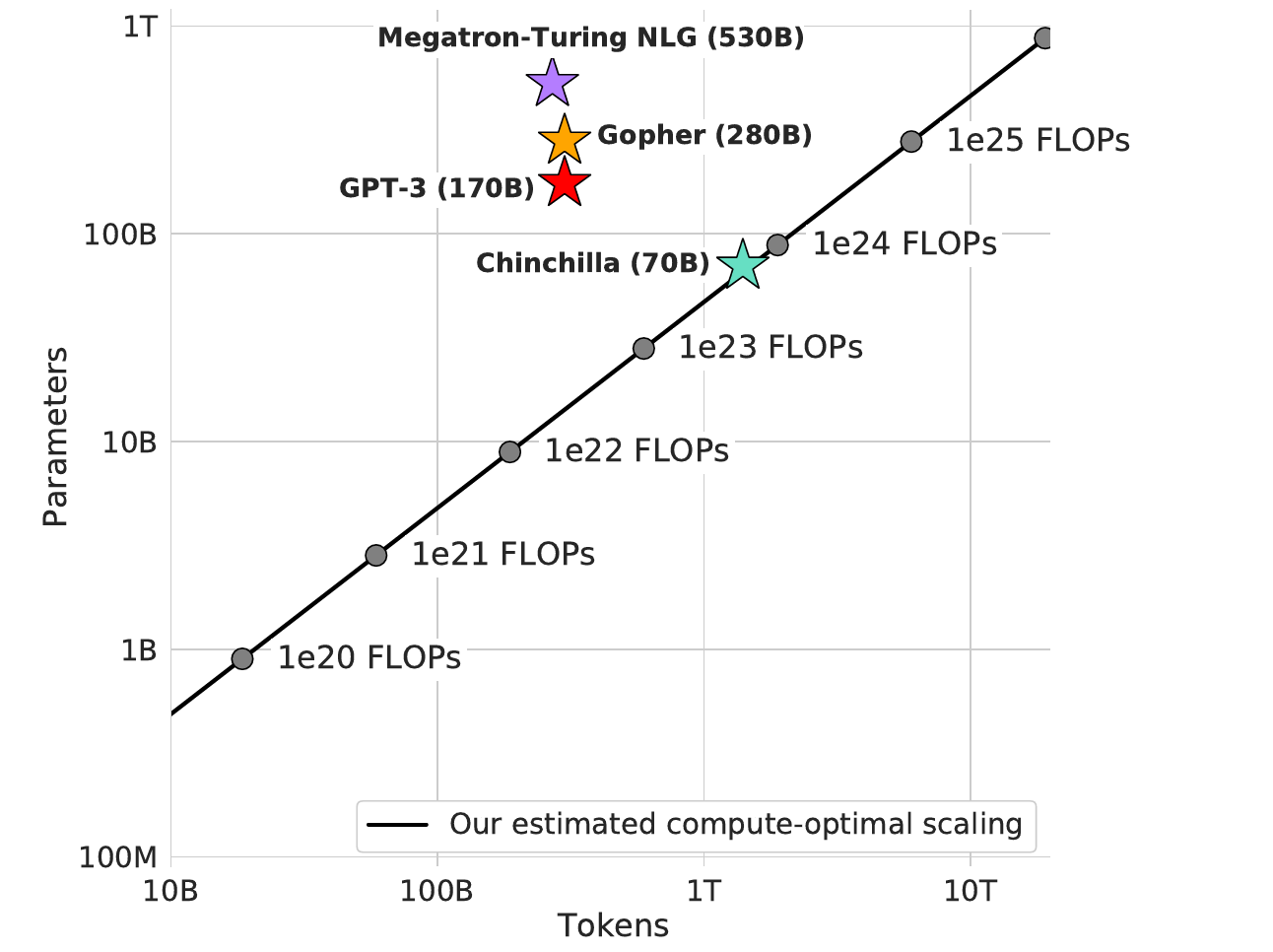

DeepMind researchers have presented Chinchilla, a new compute-optimal language model. It uses the same compute budget as Gopher but has only 70 billion parameters and is trained on 4 times more data.
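To see how a model with a quarter of Gopher's 280 billion parameters can use the same compute budget, a common rule of thumb (an assumption here, not stated in the article) estimates training compute as roughly 6 × parameters × training tokens. The minimal Python sketch below applies that approximation to the article's relative figures. The helper `training_flops` and the baseline `GOPHER_TOKENS` value are hypothetical, and only the resulting ratio is meaningful.

```python
# Rough training-compute estimate using the rule of thumb C ≈ 6 * N * D,
# where N = parameter count and D = training tokens (an assumption, not from the article).

def training_flops(params: float, tokens: float) -> float:
    """Approximate training FLOPs: about 6 FLOPs per parameter per token."""
    return 6.0 * params * tokens

# Relative figures from the article: 70B vs 280B parameters, 4x more training data.
GOPHER_PARAMS = 280e9
CHINCHILLA_PARAMS = 70e9
GOPHER_TOKENS = 1.0  # hypothetical baseline in arbitrary units; only the ratio matters
CHINCHILLA_TOKENS = 4 * GOPHER_TOKENS

gopher = training_flops(GOPHER_PARAMS, GOPHER_TOKENS)
chinchilla = training_flops(CHINCHILLA_PARAMS, CHINCHILLA_TOKENS)
ratio = chinchilla / gopher

print(f"Chinchilla / Gopher compute ratio: {ratio:.2f}")
```

Quartering the parameter count while quadrupling the data leaves the product N × D, and therefore the estimated compute, unchanged.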

Chinchilla outperformed Gopher (280B), GPT-3 (175B), Jurassic-1 (178B) and Megatron-Turing NLG (530B) on a wide range of downstream evaluation tasks. Because it is smaller, it also requires substantially less compute for fine-tuning and inference, which makes downstream use much easier.

On the MMLU benchmark, Chinchilla reached an average accuracy of 67.5%, the best result reported at the time and an improvement of more than 7 percentage points over Gopher.

The prevailing trend in training large language models has been to increase model size without a corresponding increase in the number of training tokens. The largest dense transformer at the time, MT-NLG 530B, is more than 3 times larger than GPT-3, which has 175 billion parameters.

Source: https://analyticsindiamag.com/deepmind-launches-gpt-3-rival-chinchilla/
